Gemini – tech stack

Google explained the differences and overlap between the tech stacks of Search, AI Mode/AI Overviews and Gemini. The main point is that there is a lot of overlap, so the knowledge used to optimise for Google Search is also transferable to thinking about how Google’s Gemini model works.

AI generated images

“Should I disallow crawling of AI generated images?” Google said: “Up to you! We will serve users AI generated images in search results if they’re looking for them specifically.”
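
If you do decide to keep AI generated images out of Google Images, a robots.txt rule is one way to do it. The sketch below is only an illustration: the `/ai-images/` directory and the example.com URLs are made up, and Python’s built-in robots.txt parser is not Google’s parser, so treat it as a quick sanity check rather than proof of how Googlebot-Image behaves.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block Google's image crawler from one
# directory (assumed here to hold the AI generated images).
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /ai-images/

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical URLs, used only to exercise the rules above.
blocked = parser.can_fetch("Googlebot-Image", "https://example.com/ai-images/cat.png")
allowed = parser.can_fetch("Googlebot-Image", "https://example.com/photos/cat.png")

print("AI image crawlable:", blocked)
print("Regular photo crawlable:", allowed)
```

Keeping the AI generated images in their own directory is what makes a single `Disallow` line enough here; if they are mixed in with other images, the rule would need to be more specific.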

Here is some more information about AI generated content:

| Date | Milestone / Statement | Citations |
|---|---|---|
| February 2023 | Google states using AI-generated content purely to manipulate rankings violates spam policies. AI content must be helpful and original. | Google Search’s guidance about AI-generated content |
| December 2023 | John Mueller of Google advises against using AI generated text that lacks value or originality; implied the same guidance applies to AI images. | Beyond SEO: John Mueller On AI-Generated Images & Stock Photography |
| January 2025 | Reports emerge in the SEO community of Google Image Search possibly downranking AI generated images that are low quality or widely reused, though there is no official confirmed policy. | Google Image Search Down Ranking AI-Generated Images? |
| April 2025 | Google Search Quality Raters Guidelines are updated to have raters assess if content (including images) is AI generated. Pages where the main content is low value or unoriginal regardless of how it was made can receive the lowest quality rating. | Google quality raters now assess whether content is AI-generated |
| July 2025 | For ecommerce sites, Google Merchant Center has policies for AI-generated content. In particular, AI-generated images must contain metadata using the IPTC DigitalSourceType TrainedAlgorithmicMedia metadata. AI-generated product data such as title and description attributes must be specified separately and labeled as AI-generated. | Google Search’s guidance on using generative AI content on your website |
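
For the Merchant Center row above, a rough way to spot-check whether an image file carries the IPTC label is to look for the `trainedAlgorithmicMedia` term in the file’s embedded metadata. This is only a sketch under assumptions: it assumes the label is stored as the IPTC NewsCodes URI in an uncompressed XMP packet, the function name is made up, and the sample bytes are a fake stand-in for a real image. A proper XMP/IPTC reader would be more reliable.

```python
# IPTC NewsCodes URI for the "trained algorithmic media" digital source type.
IPTC_AI_SOURCE_TYPE = (
    b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)


def looks_labelled_as_ai(image_bytes: bytes) -> bool:
    """Crude check: is the IPTC AI source-type URI present in the raw bytes?

    Hypothetical helper. It does not parse XMP, so it can miss labels
    stored in compressed or differently encoded metadata.
    """
    return IPTC_AI_SOURCE_TYPE in image_bytes


# Fake sample data standing in for image files (not real images).
labelled = b"\xff\xd8...<Iptc4xmpExt:DigitalSourceType>" + IPTC_AI_SOURCE_TYPE + b"</...>"
unlabelled = b"\xff\xd8...no source type here..."

print(looks_labelled_as_ai(labelled))
print(looks_labelled_as_ai(unlabelled))
```

With real files you would read the bytes with `open(path, "rb").read()` and pass them to the same function.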

Indexing – “Discovered – currently not indexed”

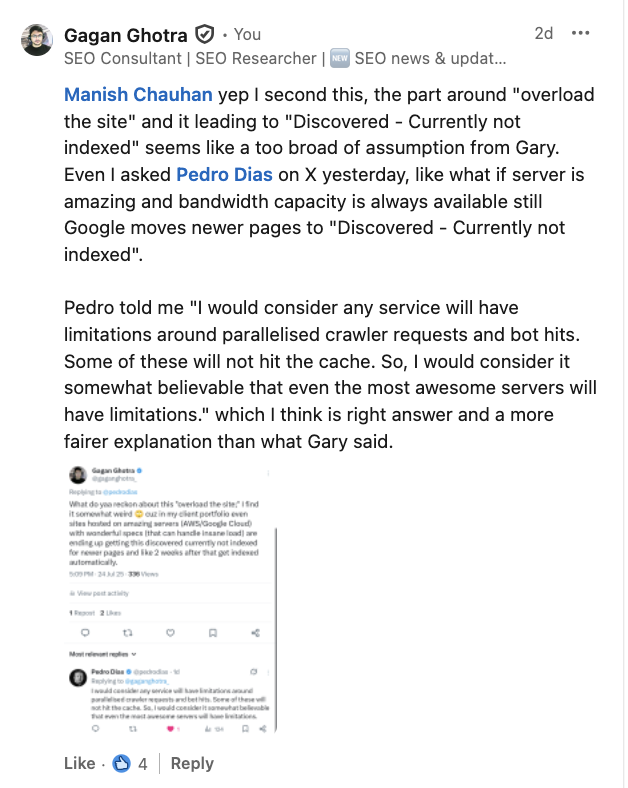

In the documentation, Google defines “Discovered – currently not indexed” as “The page was found by Google, but not crawled yet. Typically, Google wanted to crawl the URL but this was expected to overload the site; therefore Google rescheduled the crawl. This is why the last crawl date is empty on the report.” The same information was presented word for word in one of the slides by Googler Gary Illyes at the event.

I was somewhat confused by this, so I asked Pedro Dias about it.

What do yaa reckon about this “overload the site;” I find it somewhat weird 🙄 cuz in my client portfolio even sites hosted on amazing servers (AWS/Google Cloud) with wonderful specs (that can handle insane load) are ending up getting this discovered currently not indexed for newer pages and like 2 weeks after that get indexed automatically.


— Gagan Ghotra (@gaganghotra_) July 24, 2025

To which Pedro replied:

I would consider any service will have limitations around parallelised crawler requests and bot hits. Some of these will not hit the cache. So, I would consider it somewhat believable that even the most awesome servers will have limitations.


— Pedro Dias (@pedrodias) July 24, 2025

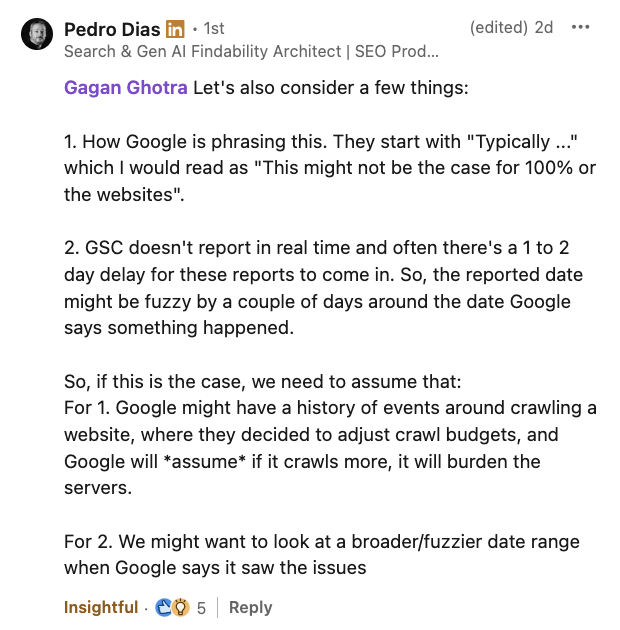

When I mentioned this on LinkedIn too, Pedro replied with the following.

Gagan Ghotra Let’s also consider a few things:

1. How Google is phrasing this. They start with “Typically …” which I would read as “This might not be the case for 100% or the websites”.

2. GSC doesn’t report in real time and often there’s a 1 to 2 day delay for these reports to come in. So, the reported date might be fuzzy by a couple of days around the date Google says something happened.

So, if this is the case, we need to assume that:

For 1. Google might have a history of events around crawling a website, where they decided to adjust crawl budgets, and Google will *assume* if it crawls more, it will burden the servers.

For 2. We might want to look at a broader/fuzzier date range when Google says it saw the issues

Screenshots of those replies are here!

unavailable_after robots meta tag

In the Google Search Central documentation, Google defines the unavailable_after robots meta tag as a way to tell Google “Do not show this page in search results after the specified date/time”. In the slide that Googler Gary Illyes presented at the event, it’s described as “Specifies a date and time to de-index this page”.

The usage instructions on the slide were “After the specified date/time (RFC 850 format), the page will be removed from search results”. For the use case, Gary mentioned “Perfect for pages about limited-time offers, events, or promotions that will expire”.
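
To make the date format concrete, here is a small sketch that builds the meta tag with an RFC 850 style date, as mentioned on the slide. The function names and the example expiry date are my own assumptions for illustration, and the weekday and month names are hard-coded so the output doesn’t depend on the machine’s locale.

```python
from datetime import datetime, timezone

_DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
         "Friday", "Saturday", "Sunday"]
_MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
           "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]


def rfc850(dt: datetime) -> str:
    """Format a timezone-aware datetime as an RFC 850 style string in GMT."""
    if dt.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    dt = dt.astimezone(timezone.utc)
    return (f"{_DAYS[dt.weekday()]}, {dt.day:02d}-{_MONTHS[dt.month - 1]}-"
            f"{dt.year % 100:02d} {dt:%H:%M:%S} GMT")


def unavailable_after_meta(dt: datetime) -> str:
    """Build the robots meta tag (hypothetical helper name)."""
    return f'<meta name="robots" content="unavailable_after: {rfc850(dt)}">'


# Example: a promotion that ends at the end of 2025 (made-up date).
expiry = datetime(2025, 12, 31, 23, 59, 59, tzinfo=timezone.utc)
tag = unavailable_after_meta(expiry)
print(tag)
# <meta name="robots" content="unavailable_after: Wednesday, 31-Dec-25 23:59:59 GMT">
```

The tag goes in the page’s `<head>`, so for a limited-time offer page you would generate it from the offer’s end date when the page is published.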

Also, a few years back while I was at an SEO Collective monthly event in Melbourne, Peter Macinkovic (now SEO Lead at Easygo Gaming and, per this YouTube video title, he is SEO For The #1 Online Casino)

When I asked him about this on LinkedIn, he replied, and he also replied to a post from Dan.

screenshots of those comments are here!

Rich Results caveats!

Googler William Prabowo said this about rich results and structured data:

・It does not directly improve search rankings

・There’s no guarantee it will be displayed

・Google may generate rich results automatically, even without structured data

・Ongoing maintenance is required

Other coverage of this event

- Google Search Central APAC 2025: Everything From Day 1, Day 2 and Day 3 (by Dan Taylor)

- Google says normal SEO works for ranking in AI Overviews and LLMS.txt won’t be used (by Barry Schwartz)

- X posts from Takuma Oka, Mieruca_kun and Kenichi Suzuki, who were at the event (summarised as tables using Grok 4)
